YOLOv12, a new series of attention-centric real-time object detection models, has recently been released, and it can be used through the familiar `YOLO` Python API from the `ultralytics` package. In this step-by-step tutorial, we are going to develop an object detection web app using YOLOv12, OpenCV and the Gradio framework, and check how well YOLOv12 performs.

Let's get started

Prerequisites

Before we start developing the object detection web application, we need to install the Python packages (dependencies) it relies on. Here is the list of packages we need to install:

- torch==2.2.2
- torchvision==0.17.2
- timm==1.0.14
- albumentations==2.0.4
- onnx==1.14.0
- onnxruntime==1.15.1
- pycocotools==2.0.7
- PyYAML==6.0.1
- scipy==1.13.0
- gradio==4.44.1
- opencv-python==4.9.0.80
- psutil==5.9.8
- py-cpuinfo==9.0.0
- huggingface-hub==0.23.2
- safetensors==0.4.3
- numpy==1.26.4
- thop

Note: you need a working internet connection to install these packages. Save the list above to a file named `requirements.txt` (one `package==version` entry per line, without the leading dashes), then install all of the packages with a single pip command:

pip install -r /path/to/requirements.txt

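If everything goes well, pip will download and install all of the packages. The application code below also imports `YOLO` from the `ultralytics` package, which is not in this list, so make sure that a version of `ultralytics` able to load the YOLOv12 checkpoints is installed as well.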
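Because the application depends on exact versions, a quick sanity check after installing can save debugging time later. The sketch below is a minimal, hypothetical helper (the names `parse_pins`, `to_requirements` and `check_pins` are illustrative and not part of pip): it turns the dashed list above into `requirements.txt` lines and compares each pin with what `importlib.metadata` reports for the current environment. It assumes Python 3.8+ and that each package's installed distribution name matches the name used in the list.

```python
from importlib.metadata import PackageNotFoundError, version


def parse_pins(lines):
    """Hypothetical helper: turn lines like '- torch==2.2.2' or '- thop' into {name: pin or None}."""
    pins = {}
    for line in lines:
        entry = line.strip().lstrip("-").strip()
        if not entry:
            continue  # skip blank lines between list items
        name, _, pinned = entry.partition("==")
        pins[name.strip()] = pinned.strip() or None  # None means "any version"
    return pins


def to_requirements(pins):
    """Hypothetical helper: render pins in requirements.txt format (one entry per line)."""
    return "\n".join(n if v is None else f"{n}=={v}" for n, v in pins.items()) + "\n"


def check_pins(pins, lookup=version):
    """Hypothetical helper: return {name: problem} for missing or mismatched packages."""
    problems = {}
    for name, pinned in pins.items():
        try:
            installed = lookup(name)
        except PackageNotFoundError:
            problems[name] = "not installed"
            continue
        if pinned is not None and installed != pinned:
            problems[name] = f"installed {installed}, expected {pinned}"
    return problems


if __name__ == "__main__":
    # A few entries from the list above; paste the full list in practice.
    listing = """
    - torch==2.2.2
    - gradio==4.44.1
    - opencv-python==4.9.0.80
    - numpy==1.26.4
    - thop
    """.splitlines()
    pins = parse_pins(listing)
    print(to_requirements(pins))
    for name, problem in check_pins(pins).items():
        print(f"{name}: {problem}")
```

Passing `lookup` as a parameter keeps the comparison logic testable without touching the real environment; by default it uses `importlib.metadata.version`.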

Application Code

```python
# app.py
import tempfile

import cv2
import gradio as gr
from ultralytics import YOLO


def yolov12_inference(image, video, model_id, image_size, conf_threshold):
    # Load the selected YOLOv12 checkpoint (downloaded on first use if not cached locally).
    model = YOLO(model_id)

    if image is not None:
        # Image mode: run detection on a single image.
        results = model.predict(source=image, imgsz=image_size, conf=conf_threshold)
        annotated_image = results[0].plot()  # annotated image as a BGR numpy array
        # Reverse the channel order (BGR -> RGB) for Gradio's numpy image output.
        return annotated_image[:, :, ::-1], None

    # Video mode: Gradio passes the uploaded video as a file path.
    cap = cv2.VideoCapture(video)
    fps = cap.get(cv2.CAP_PROP_FPS) or 30.0  # fall back to 30 if the file reports 0 FPS
    frame_width = int(cap.get(cv2.CAP_PROP_FRAME_WIDTH))
    frame_height = int(cap.get(cv2.CAP_PROP_FRAME_HEIGHT))

    # Create the output file safely (tempfile.mktemp is deprecated and race-prone).
    with tempfile.NamedTemporaryFile(suffix=".webm", delete=False) as tmp:
        output_video_path = tmp.name
    # Write annotated frames as WebM (VP8) so the browser can play the result.
    out = cv2.VideoWriter(
        output_video_path,
        cv2.VideoWriter_fourcc(*"vp80"),
        fps,
        (frame_width, frame_height),
    )

    while cap.isOpened():
        ret, frame = cap.read()
        if not ret:
            break  # end of video
        results = model.predict(source=frame, imgsz=image_size, conf=conf_threshold)
        annotated_frame = results[0].plot()
        out.write(annotated_frame)  # OpenCV expects BGR frames, so no conversion here

    cap.release()
    out.release()
    return None, output_video_path


def yolov12_inference_for_examples(image, model_path, image_size, conf_threshold):
    # Wrapper for gr.Examples, which only supplies image inputs.
    annotated_image, _ = yolov12_inference(image, None, model_path, image_size, conf_threshold)
    return annotated_image


def app():
    with gr.Blocks():
        with gr.Row():
            with gr.Column():
                image = gr.Image(type="pil", label="Image", visible=True)
                video = gr.Video(label="Video", visible=False)
                input_type = gr.Radio(
                    choices=["Image", "Video"],
                    value="Image",
                    label="Input Type",
                )
                model_id = gr.Dropdown(
                    label="Model",
                    choices=[
                        "yolov12n.pt",
                        "yolov12s.pt",
                        "yolov12m.pt",
                        "yolov12l.pt",
                        "yolov12x.pt",
                    ],
                    value="yolov12m.pt",
                )
                image_size = gr.Slider(
                    label="Image Size",
                    minimum=320,
                    maximum=1280,
                    step=32,
                    value=640,
                )
                conf_threshold = gr.Slider(
                    label="Confidence Threshold",
                    minimum=0.0,
                    maximum=1.0,
                    step=0.05,
                    value=0.25,
                )
                yolov12_infer = gr.Button(value="Detect Objects")

            with gr.Column():
                output_image = gr.Image(type="numpy", label="Annotated Image", visible=True)
                output_video = gr.Video(label="Annotated Video", visible=False)

        def update_visibility(input_type):
            # Show only the input and output components that match the selected mode.
            is_image = input_type == "Image"
            return (
                gr.update(visible=is_image),
                gr.update(visible=not is_image),
                gr.update(visible=is_image),
                gr.update(visible=not is_image),
            )

        input_type.change(
            fn=update_visibility,
            inputs=[input_type],
            outputs=[image, video, output_image, output_video],
        )

        def run_inference(image, video, model_id, image_size, conf_threshold, input_type):
            if input_type == "Image":
                return yolov12_inference(image, None, model_id, image_size, conf_threshold)
            return yolov12_inference(None, video, model_id, image_size, conf_threshold)

        yolov12_infer.click(
            fn=run_inference,
            inputs=[image, video, model_id, image_size, conf_threshold, input_type],
            outputs=[output_image, output_video],
        )

        # The example image paths are relative: they assume the app is started from a
        # directory that contains ultralytics/assets/ (e.g. a cloned repository root).
        gr.Examples(
            examples=[
                ["ultralytics/assets/bus.jpg", "yolov12s.pt", 640, 0.25],
                ["ultralytics/assets/zidane.jpg", "yolov12x.pt", 640, 0.25],
            ],
            fn=yolov12_inference_for_examples,
            inputs=[image, model_id, image_size, conf_threshold],
            outputs=[output_image],
            cache_examples="lazy",
        )


gradio_app = gr.Blocks()
with gradio_app:
    gr.HTML(
        """
        <h1 style='text-align: center'>
        YOLOv12: Attention-Centric Real-Time Object Detectors
        </h1>
        """
    )
    with gr.Row():
        with gr.Column():
            app()

if __name__ == "__main__":
    gradio_app.launch()
```

Running Gradio App

To run the Gradio object detection web app, save the code above as `app.py` and execute the following command in the Command Prompt or Terminal:

python app.py

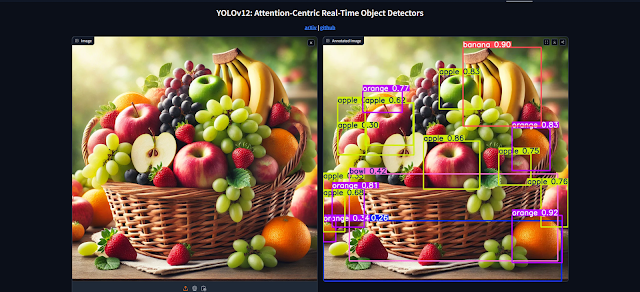

Now if everything goes well then you will be able to see your object detection web app running in your default web browser as shown in the below mentioned image or you can also run it inside any browser of you choice through localhost. You can see the localhost ip inside Command Prompt or in Terminal applications.

Figure-1: Web App Interface
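One detail in `yolov12_inference` is easy to get wrong when adapting the app: `results[0].plot()` returns the annotated image in OpenCV's BGR channel order. The image branch reverses the channels with `[:, :, ::-1]` because Gradio's numpy image output expects RGB, while the video branch writes the BGR frame straight to `cv2.VideoWriter`, which expects BGR. The NumPy-only sketch below uses a made-up two-pixel image to show what that slice does; `bgr_to_rgb` is a hypothetical helper name, not part of the app.

```python
import numpy as np


def bgr_to_rgb(img):
    """Hypothetical helper: reverse the last axis, (B, G, R) -> (R, G, B).

    This is the same slice the image branch applies to results[0].plot().
    """
    return img[:, :, ::-1]


# Made-up 1x2 image in BGR order: first pixel pure blue, second pixel pure red.
bgr = np.array([[[255, 0, 0], [0, 0, 255]]], dtype=np.uint8)
rgb = bgr_to_rgb(bgr)

print(rgb[0, 0].tolist())  # blue pixel, now written in RGB order
print(rgb[0, 1].tolist())  # red pixel, now written in RGB order
```

`cv2.cvtColor(img, cv2.COLOR_BGR2RGB)` performs the same conversion; the difference is that it returns a new contiguous array, whereas the slice returns a reversed view of the original data.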
